Decomposing AI Development: A Practitioner's Guide to Navigating Decision-Making in AI System Development

Welcome to the second installment of the 'AI Development Demystified' series for builders. After digesting this deep dive, you'll join the 1% who truly grasp the AI development landscape. No fluff, just hard-earned insights.

There's no shortage of information on building AI solutions. But most of it lacks nuance.

That's why I wanted to create a foundational resource for new data and AI professionals who are ready to commit the time to gain practitioner-level knowledge.

In this second installment, you will:

Learn how to decompose AI development into its core components

Understand the interplay between the core components

Be able to demonstrate expert-level knowledge in workplace discussions that will earn you respect from the AI subject matter experts on the team

If you want to master the technical foundations of AI, this resource is for you.

About my AI background:

I've been in the trenches of AI development since 2018, well before ChatGPT made AI "cool" and turned it into table stakes for innovation.

As a product lead for Symbolic AI and Machine Learning (ML) based software products, I've led the development of multiple AI products from scratch.

My technical expertise spans NLP, deep learning, knowledge representation and reasoning (KR&R), ML lifecycle management, and big data processing frameworks (like Spark), applied to solving customer problems in text summarization, speech, personalization, and large-scale MLOps.

I've made many mistakes in my AI journey, and now you don't have to.

Here's what we'll cover in this guide:

The Three Technical Paradigms

Difference Between Symbolic AI, Adaptive AI, and Hybrid AI

Decomposing AI Development

The AI Development Framework

High-level Processes and Key Relationships

The Problem Domain of AI development

The Solution Domain of AI development

Framing the Mathematical Formula of AI Development

Example 1: Decomposing "Generative AI"

Example 2: Decomposing "Natural Language Processing (NLP)"

Decision-Making Guide for AI Development

Which AI Development Paradigm to Choose?

Which Learning Approach to Use?

Which Learning Strategy to Apply?

Which AI Model Architecture to Implement?

FAQs

Heads-up: This is a deep dive. Unlike my other posts, my deep dives are optimized for knowledge sharing, not brevity. This is a resource for "builder" archetype individual contributors (ICs) and leaders who want to go from a surface-level understanding to a seasoned practitioner-level understanding in one weekend.

💡 I recommend committing at least 12 hours to digesting this installment.

If you're serious about mastering the fundamentals of AI development, start with Part 1 of this series and work your way through to the end. Each installment builds on the last, providing you with the practical knowledge to discern reality from hype, separate fact from misinformation, and ultimately think and talk like a true AI practitioner.

Everyone's heard the golden rule of AI development: "Garbage IN, Garbage OUT."

An AI system becomes what it eats, quite literally. It lives and learns within its own data bubble. The larger and more diverse the bubble, the more "intelligent" it gets.

The boundaries of this data bubble are rapidly expanding. While storage costs have decreased, the sheer scale of data required for sophisticated AI systems keeps overall storage expenses significant. Similarly, while processing power has become more efficient, the computational demands of advanced AI models have significantly increased typical training and operational costs. For example, OpenAI's large language model GPT-3 was trained on a corpus filtered down from roughly 45TB of compressed text (about 570GB after filtering), and the cost to train it was estimated at around $4.6 million in 2020.

As covered in Part 1 of this series, "From Narrow AI to Superintelligence: What's the Difference and When Will We Get There?", AI's natural evolutionary path is ambitious, and we're only getting started. According to Stanford University's 2023 AI Index Report, private investment in AI reached $91.9 billion in 2022, while federal government investment in AI R&D in the U.S. is expected to reach $1.5 billion in 2025. These investments demonstrate a strong commitment from both the private and public sectors to push AI's potential for transformative impact.

We're on the brink of Narrow AI disrupting many industries, poised to transform how we think, work, and live in the coming decades. But:

How does one develop an AI solution?

What's a practical framework for approaching AI development?

What are the key decisions to be made, and what critical trade-offs must we consider in building AI systems that meet our goals?

In this second installment, we'll answer these questions by zooming out and in, zigging and zagging to provide both the big picture and essential details.

Let's dive into the trenches.

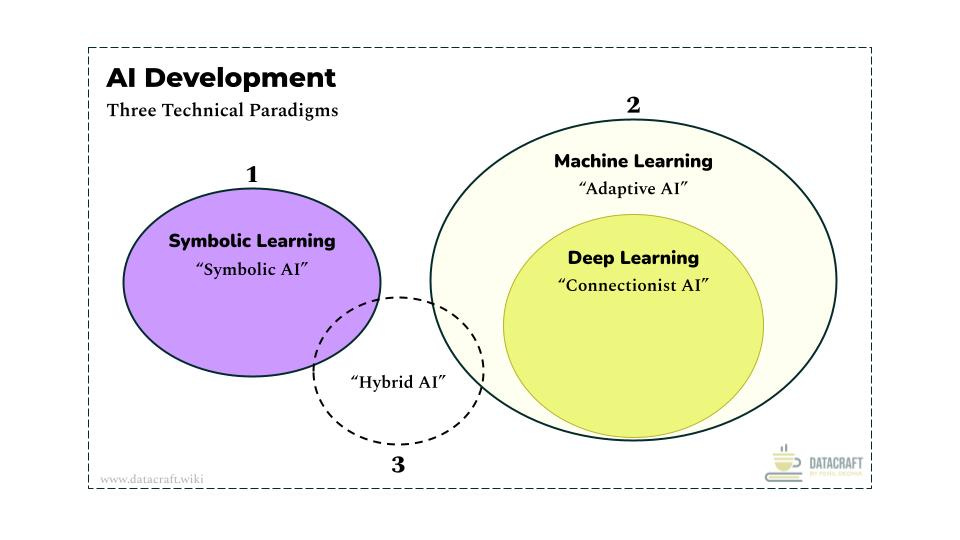

The Three Technical Paradigms

Understanding the foundational paradigms of AI is crucial for grasping the full spectrum of AI development.

Symbolic AI: Uses logic and knowledge representation

Adaptive AI: Learns from data and adapts to improve performance over time

Hybrid AI: Combines Symbolic and Adaptive approaches

Each paradigm offers its own set of learning approaches that an AI system can implement to acquire new knowledge.

Most AI development guides jump past the basics and dive straight into specific, popular branches of AI, like the neural networks used in Deep Learning. However, the evolution of Narrow AI is progressing towards an era of Hybrid AI systems. This shift is driven by the growing importance of Explainable AI (XAI) and Ethical AI, both crucial for any hope of achieving Artificial General Intelligence (AGI).

As a Data and AI practitioner, you need a working knowledge of all three technical paradigms. Today, that's table stakes.

Difference Between Symbolic AI, Adaptive AI, and Hybrid AI

Symbolic AI, also known as Classical AI, Rule-Based AI, or Good Old-Fashioned AI (GOFAI), uses human-readable symbols and rules to represent knowledge and solve problems through logical reasoning. It relies on explicit programming of knowledge and rules, similar to how we use language or symbols in mathematics, making it particularly useful in domains where expert knowledge can be clearly codified.

This approach was the first formal attempt at creating AI, and it grew in popularity between the 1950s and 1980s.

Expert systems for medical diagnosis, early Interactive Voice Response (IVR) systems, automated theorem provers, and chess-playing programs like IBM's Deep Blue are all examples of Symbolic AI.

Note that the absence of adaptive learning alone is not sufficient to classify an AI system as 'Symbolic AI'. For example, a simple calculator operates on predefined rules and doesn't learn, but it's not considered AI. Similarly, Pac-Man isn't Symbolic AI, despite its rule-based nature.

The main identifiers of Symbolic AI are:

Operates primarily based on predefined rules and knowledge representations

Has explicit, human-readable rules and knowledge bases

Has a built-in capacity to explain its decision-making process, which can be accessed for troubleshooting or whenever a deeper understanding of the system's decisions or reasoning is needed
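To make these identifiers concrete, here is a minimal sketch of a rule-based system, assuming a simple forward-chaining engine over IF-THEN rules. The `Rule` class, the `forward_chain` function, and the toy medical rules are hypothetical illustrations, not a real expert system. The knowledge lives in readable rules, and the engine records a trace that explains every conclusion it reaches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """A human-readable IF-THEN rule: if all premises hold, conclude `conclusion`."""
    name: str
    premises: frozenset[str]
    conclusion: str


def forward_chain(facts: set[str], rules: list[Rule]) -> tuple[set[str], list[str]]:
    """Apply rules repeatedly until no new facts can be derived.

    Returns the final set of facts plus a trace explaining each inference.
    """
    known = set(facts)
    trace: list[str] = []
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.premises <= known and rule.conclusion not in known:
                known.add(rule.conclusion)
                trace.append(f"{rule.name}: {sorted(rule.premises)} -> {rule.conclusion}")
                changed = True
    return known, trace


# Hypothetical toy knowledge base, for illustration only (not medical advice).
RULES = [
    Rule("R1", frozenset({"fever", "cough"}), "respiratory_infection_suspected"),
    Rule("R2", frozenset({"respiratory_infection_suspected", "low_oxygen"}), "refer_to_physician"),
]

facts, explanation = forward_chain({"fever", "cough", "low_oxygen"}, RULES)
for step in explanation:  # the explanation is a by-product of reasoning
    print(step)
```

Nothing here is learned: changing the system's behavior means editing the rules by hand.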

Adaptive AI (machine learning) is a type of AI that can learn from data and improve its performance over time without being explicitly programmed. It uses statistical techniques to find patterns in data and make decisions or predictions based on these patterns.

Under this technical paradigm, deep learning (or "Connectionist AI") is a specific Learning Strategy and Generative AI is an Application Domain. We'll explore these concepts in more depth in the upcoming sections.

Image and speech recognition systems, chatbots, recommendation engines, spam filters, and predictive maintenance systems in manufacturing are a few examples of Adaptive AI.
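By contrast, an Adaptive AI system learns its decision rule from labeled examples instead of having it written by hand. The sketch below assumes a nearest-centroid classifier (a deliberately simple statistical technique chosen for illustration) and a hypothetical toy spam dataset with two features: links per email and exclamation marks.

```python
from statistics import mean

Point = tuple[float, float]


def fit_centroids(examples: list[tuple[Point, str]]) -> dict[str, Point]:
    """Learn one centroid (the mean feature vector) per label from labeled data."""
    by_label: dict[str, list[Point]] = {}
    for features, label in examples:
        by_label.setdefault(label, []).append(features)
    return {
        label: (mean(p[0] for p in points), mean(p[1] for p in points))
        for label, points in by_label.items()
    }


def predict(centroids: dict[str, Point], features: Point) -> str:
    """Return the label whose learned centroid is closest (squared Euclidean distance)."""
    fx, fy = features
    return min(
        centroids,
        key=lambda label: (centroids[label][0] - fx) ** 2
        + (centroids[label][1] - fy) ** 2,
    )


# Hypothetical training data: (links_in_email, exclamation_marks) -> label.
training = [
    ((0, 0), "ham"), ((1, 1), "ham"), ((0, 1), "ham"),
    ((6, 5), "spam"), ((8, 7), "spam"), ((7, 9), "spam"),
]

model = fit_centroids(training)  # the "pattern" is learned from data
print(predict(model, (5, 6)))    # nearest to the spam centroid
```

Feed it different training data and the learned centroids, and therefore its decisions, change without any rule being rewritten.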

Hybrid AI combines elements of both Symbolic AI and Adaptive AI (machine learning). It aims to integrate the logical reasoning and explainability of Symbolic AI with the learning capabilities and flexibility of machine learning.

Self-driving cars, modern voice assistants like Siri and Alexa, humanoid robots, etc., are all good examples of Hybrid AI. They all involve ML-based systems to understand their surroundings (like recognizing objects, text, speech, etc.) and rule-based systems to make safe, predictable decisions.
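A hybrid system can be sketched as a learned perception step feeding explicit decision rules. Below, `perceive` stands in for a trained ML model: it is a logistic function whose weights `W` and `B` are hypothetical values assumed to have been learned elsewhere. The `decide` function is the symbolic layer, a handful of human-readable rules that keep the final action predictable and explainable.

```python
import math
from dataclasses import dataclass

# Hypothetical parameters, assumed to come from a previously trained model.
W, B = 8.0, -4.0


@dataclass
class Perception:
    label: str         # what the learned model believes it sees
    confidence: float  # model confidence in [0, 1]


def perceive(sensor_score: float) -> Perception:
    """Adaptive part: a logistic model turns a raw sensor score into a belief."""
    p_pedestrian = 1.0 / (1.0 + math.exp(-(W * sensor_score + B)))
    if p_pedestrian >= 0.5:
        return Perception("pedestrian", p_pedestrian)
    return Perception("clear_road", 1.0 - p_pedestrian)


def decide(p: Perception) -> str:
    """Symbolic part: explicit, auditable rules map beliefs to actions."""
    if p.confidence < 0.7:
        return "slow_down"  # Rule 1: uncertain perception -> act cautiously
    if p.label == "pedestrian":
        return "brake"      # Rule 2: confident pedestrian detection -> brake
    return "proceed"        # Rule 3: confident clear road -> continue


for score in (0.95, 0.5, 0.05):
    belief = perceive(score)
    print(score, belief.label, round(belief.confidence, 2), decide(belief))
```

The learned component can be retrained without touching the safety rules, and the rules can be audited without inspecting model weights.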

🌟 Decomposing AI Development

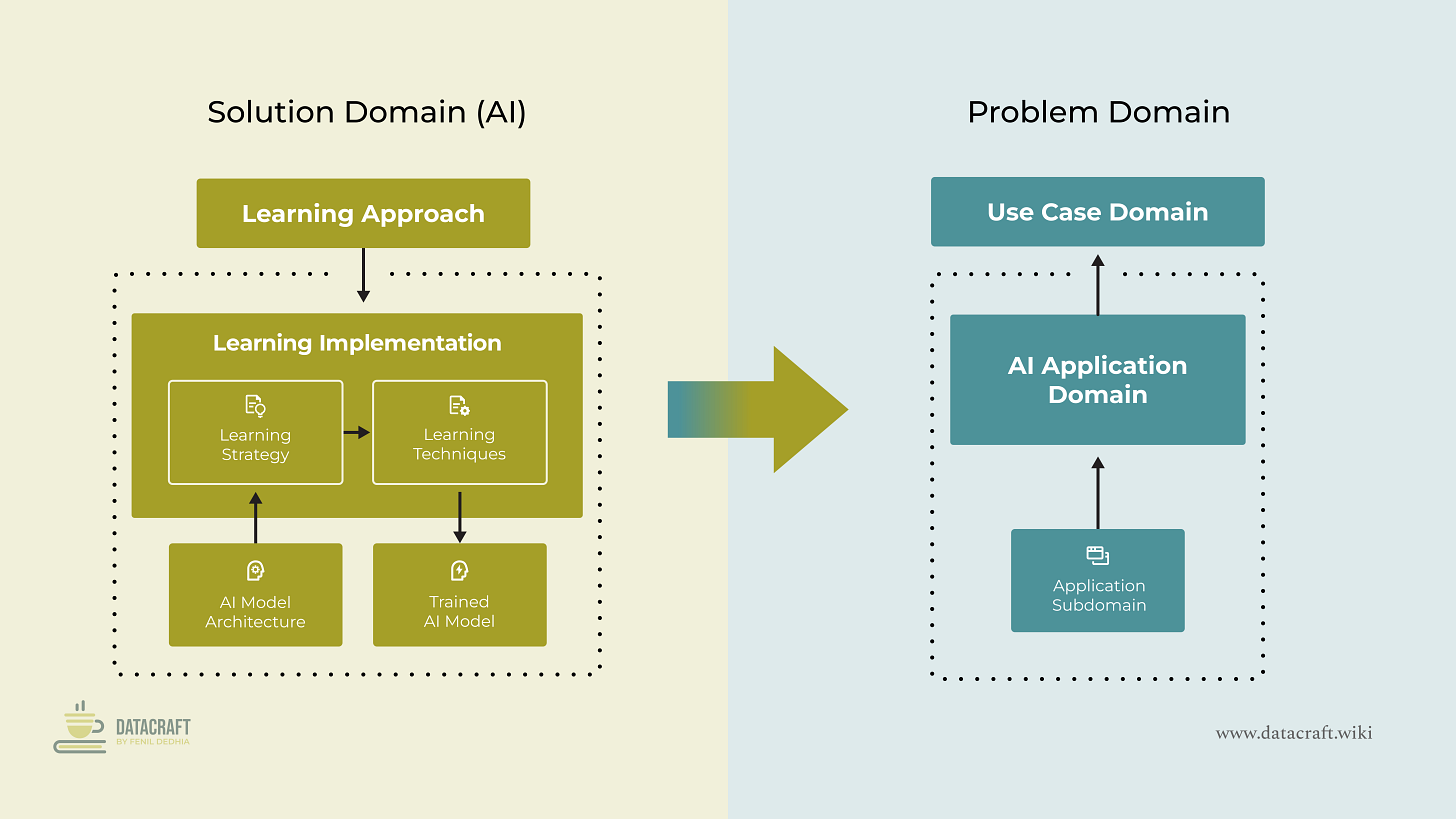

Decomposing AI development into a core functional framework helps us visualize both the problem space and the technical implementation, making it easier to systematically track the key decisions to make and the critical trade-offs to consider when building AI systems that meet our goals.

In the following sections, we'll first digest the key components of our AI development framework and then illustrate its application through examples in Generative AI and Natural Language Processing.

The AI Development Framework

Important note: The terminology and definitions are critical to applying this framework in the real world. The nomenclature is deliberate and carefully crafted. You might want to review this section multiple times to fully internalize it.

Framework Domain

Problem Domain: Focuses on the "what" and the "why" of AI development, defining the context, requirements, and objectives.

Solution Domain (AI): Focuses on the "how" of AI development, encompassing the technical approaches, methods, and models used to create an AI solution that addresses the Problem Domain.

Learning Approach: This is the overall process or structure of "how" the learning happens. The learning approach answers the fundamental question: How will the AI system learn from the available data?

Learning Implementation: The process of implementing the learning approach to develop a tailored AI solution for a particular application domain or use case domain.

Learning Strategy: The specific methodology or strategy chosen to implement the Learning Approach.

Learning Techniques: The specific techniques or algorithms used to execute the Learning Strategy.

AI Model Architecture: The architectural blueprint of "how" an AI model processes data to learn, also known as the model architecture "type".

Trained AI Model: The outcome of applying learning strategies and techniques to an AI model architecture, resulting in a model that is tailored to a specific application domain or use case domain.

Application Domain (AI): The specialized areas within AI that drive the development of new AI models. It represents broad AI fields such as Generative AI, NLP, Autonomous Systems, and Explainable AI (XAI).

Use Case Domain: The real-world problems to which AI solutions are applied. It represents the concrete application of AI: the specific scenarios, tasks, or problems. Use Case Domains are generally industry-agnostic, but can be industry-specific when unique tasks or problems warrant an AI solution.
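One way to internalize how these terms nest is to encode them as a data model. The sketch below is a hypothetical Python representation, not a standard schema: grouping Learning Strategy, Learning Techniques, and AI Model Architecture under Learning Implementation follows the definitions above, while placing the Application Domain and Use Case Domain next to the problem statement is an assumption made for this illustration. The filled-in plan (a support-ticket summarizer) is likewise invented.

```python
from dataclasses import dataclass


@dataclass
class LearningImplementation:
    """The detailed "how": strategy, techniques, and architecture."""
    strategy: str          # e.g. transfer learning
    techniques: list[str]  # e.g. backpropagation, an optimizer
    architecture: str      # e.g. Transformer, CNN


@dataclass
class SolutionDomain:
    learning_approach: str  # e.g. supervised, unsupervised
    implementation: LearningImplementation


@dataclass
class AIDevelopmentPlan:
    problem_statement: str   # Problem Domain: the "what" and "why"
    application_domain: str  # broad AI field, e.g. NLP
    use_case_domain: str     # concrete real-world task
    solution: SolutionDomain # Solution Domain: the "how"

    def summary(self) -> str:
        impl = self.solution.implementation
        return (
            f"{self.use_case_domain} [{self.application_domain}]: "
            f"{self.solution.learning_approach} learning -> {impl.strategy} "
            f"on a {impl.architecture} using {', '.join(impl.techniques)}"
        )


# Hypothetical example plan.
plan = AIDevelopmentPlan(
    problem_statement="Support agents spend too long reading long tickets",
    application_domain="NLP",
    use_case_domain="Support-ticket summarization",
    solution=SolutionDomain(
        learning_approach="supervised",
        implementation=LearningImplementation(
            strategy="transfer learning",
            techniques=["backpropagation", "Adam optimizer"],
            architecture="Transformer",
        ),
    ),
)
print(plan.summary())
```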

High-level Processes and Key Relationships

This section is the key aha moment. Are you ready?

The Learning Approach, such as supervised or unsupervised learning, dictates how learning will be executed through Learning Implementation and is the first decision to make in the AI development process.

After selecting the Learning Approach, Learning Implementation begins, involving the selection of the Learning Strategy, Learning Techniques, and AI Model Architecture to create a tailored AI solution.

The Learning Strategy is chosen to align with the Learning Approach and the target application domain or use case domain, defining which strategy or methodology, such as deep learning or transfer learning, will be used to optimize the learning process.

As part of the Learning Strategy, the AI Model Architecture, such as CNNs or Transformers, is selected based on the type of available data and target task, serving as the blueprint for how the model processes data.

Once the Learning Strategy and AI Model Architecture are in place, Learning Techniques, including optimization algorithms or backpropagation, are applied to train and fine-tune the model.
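As a concrete example of a Learning Technique, the sketch below trains a one-feature linear model with batch gradient descent, a basic optimization algorithm. With a single linear unit the gradients of the mean squared error can be written out by hand; backpropagation is the procedure that computes the same kind of gradients layer by layer in deep networks. The learning rate, epoch count, and toy data are assumptions chosen so the example converges.

```python
def train_linear_model(xs: list[float], ys: list[float],
                       lr: float = 0.05, epochs: int = 2000) -> tuple[float, float]:
    """Fit y = w*x + b by batch gradient descent on mean squared error (MSE)."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE = (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        grad_w = (2 / n) * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = (2 / n) * sum((w * x + b - y) for x, y in zip(xs, ys))
        # Step against the gradient to reduce the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data generated by y = 2x + 1
w, b = train_linear_model(xs, ys)
print(round(w, 3), round(b, 3))
```

The model starts knowing nothing (w = b = 0) and recovers the underlying relationship purely from the data.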

The result of the Learning Implementation process is the Trained AI Model, a model that has been optimized using the chosen strategies, techniques, and architecture, and is now tailored to the Application Domain and Use Case Domain.

The Trained AI Model is then deployed within a specific Application Domain, such as NLP or Computer Vision, to address broader AI fields relevant to the problem at hand.

Finally, the Trained AI Model, on its own or as part of a larger AI solution, is applied to real-world Use Case Domains, solving specific problems like medical diagnosis assistance or code generation.
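The ordering described in this section can be sketched as a small state machine that refuses to record a step before its predecessors. The step names, the `DevelopmentFlow` class, and the example values are hypothetical; the sequence itself follows the relationships laid out above.

```python
STEPS = (
    "learning_approach",    # first decision, e.g. supervised learning
    "learning_strategy",    # e.g. transfer learning
    "model_architecture",   # chosen as part of the strategy, e.g. Transformer
    "learning_techniques",  # e.g. backpropagation with an optimizer
    "trained_model",        # outcome of Learning Implementation
    "application_domain",   # where the model is deployed, e.g. NLP
    "use_case_domain",      # the concrete problem it solves
)


class DevelopmentFlow:
    """Records framework steps and rejects any step taken out of order."""

    def __init__(self) -> None:
        self.record: dict[str, str] = {}

    def step(self, name: str, value: str) -> "DevelopmentFlow":
        done = len(self.record)
        if done == len(STEPS):
            raise ValueError("all steps are already recorded")
        expected = STEPS[done]
        if name != expected:
            raise ValueError(f"expected {expected!r} next, got {name!r}")
        self.record[name] = value
        return self  # allow chaining


flow = (
    DevelopmentFlow()
    .step("learning_approach", "supervised")
    .step("learning_strategy", "transfer learning")
    .step("model_architecture", "Transformer")
    .step("learning_techniques", "backpropagation + Adam")
    .step("trained_model", "code-assistant-v1")  # hypothetical model name
    .step("application_domain", "NLP")
    .step("use_case_domain", "code generation")
)
print(list(flow.record))

try:
    DevelopmentFlow().step("model_architecture", "CNN")
except ValueError as err:
    print("rejected:", err)
```

Calling `step` out of order, for example picking an architecture before a Learning Approach, raises a `ValueError`.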

🔑 You've completed the foundation section!

What's ahead: We're about to explore the Problem and Solution domains of AI development, the core concepts that will help you connect all those buzzwords you've been hearing. Get ready to see how everything fits together in the AI landscape.